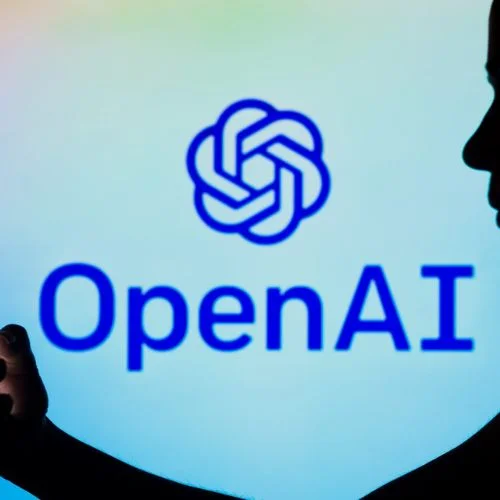

Researchers have found that ChatGPT is limited in its ability to provide location-specific information about environmental justice issues, a finding they say may point to geographic biases in the chatbot.

Researchers at Virginia Tech in the United States asked ChatGPT to respond to a prompt about environmental justice issues in each of the country's 3,108 counties.

ChatGPT, developed by OpenAI, is a generative artificial intelligence (AI) tool trained on vast amounts of natural language data. Such AI systems, known as large language models, can interpret, manipulate and generate text in response to user requests, or “prompts.”

While ChatGPT showed it could identify location-specific environmental justice challenges in large, densely populated areas, the researchers found that the tool was limited when it came to local environmental justice issues elsewhere.

They reported that the AI model could provide location-specific information for only about 17% of the counties queried, or 515 of the 3,108. The findings have been published in the journal Telematics and Informatics.

“We need to investigate the technology’s limitations so that future developers are aware of the possibility of bias. That was the motivation behind our research,” said Junghwan Kim, an assistant professor at Virginia Tech and the study’s corresponding author.

The researchers said they chose environmental justice as the focus of their study to broaden the range of topics typically used to evaluate how well generative AI systems perform.

Environmental justice is defined by the US Department of Energy as “the fair treatment and meaningful participation of all people, regardless of race, color, national origin, or income, in the development, implementation, and enforcement of environmental laws, regulations, and policies.”

According to the researchers, querying by county allowed them to compare ChatGPT's responses with sociodemographic data such as population density and median family income. The counties they studied ranged in population from 10,019,635 (Los Angeles County, California) to 83 (Loving County, Texas).

The researchers found that in rural states such as Idaho and New Hampshire, more than 90% of the population lived in counties for which ChatGPT could not provide location-specific information.

By contrast, in states with larger urban populations, such as Delaware and California, less than 1% of the population lived in such counties, according to the study.
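The nationwide figure (about 17% of counties) and the state figures (share of residents) are counted differently, which is why a handful of populous counties can dominate a state's result. The following is a minimal Python sketch of a population-weighted share, assuming each county is simply labeled by whether ChatGPT's reply contained location-specific information. The `County` class, the `has_local_info` flag and all numbers are hypothetical illustrations, not the study's data or method.

```python
from dataclasses import dataclass


@dataclass
class County:
    name: str
    population: int
    has_local_info: bool  # hypothetical label: did the reply contain local specifics?


def share_without_local_info(counties: list[County]) -> float:
    """Population-weighted fraction of residents living in counties without local info."""
    total = sum(c.population for c in counties)
    if total == 0:
        return 0.0
    missing = sum(c.population for c in counties if not c.has_local_info)
    return missing / total


def county_share_without_local_info(counties: list[County]) -> float:
    """Unweighted fraction of counties without local info (each county counts once)."""
    if not counties:
        return 0.0
    return sum(not c.has_local_info for c in counties) / len(counties)


# Toy numbers, not from the study: one large county with local info,
# three small counties without it.
toy_state = [
    County("A", 900_000, True),
    County("B", 40_000, False),
    County("C", 35_000, False),
    County("D", 25_000, False),
]

if __name__ == "__main__":
    by_county = county_share_without_local_info(toy_state)
    by_resident = share_without_local_info(toy_state)
    print(f"{by_county:.0%} of counties, {by_resident:.0%} of residents")
```

In this toy state, three of four counties (75%) lack local information, yet they hold only 10% of residents, so the population-weighted figure is far smaller than the county count would suggest.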

“While more research is needed, our findings show that geographic biases exist in the ChatGPT model,” said Kim, who teaches in the Department of Geography.

According to research co-author Ismini Lourentzou, who teaches in the Department of Computer Science, the results raised concerns about the “reliability and resiliency of large-language models.”

“This is a starting point to investigate how programmers and AI developers might be able to anticipate and mitigate the disparity of information between big and small cities, between urban and rural environments,” Kim said.